What is data labeling? Process, steps, and how Zweelie can help

Data labeling is the process of preparing structured, high-quality data so AI systems can learn from it. Raw text, audio, or other inputs are labeled with the right categories, intents, entities, or outcomes so a model can be trained or evaluated. Without labeled data, AI cannot reliably learn what "leasing inquiry" or "maintenance request" means, or which conversations represent revenue opportunity. With it, models become accurate and consistent, and they improve over time.

This article explains what data labeling is, walks through the process of data labeling (the typical steps and why they matter), and describes how Zweelie can help: what Zweelie does, who it benefits, and what you gain from industry-specific data labeling instead of building it in house.

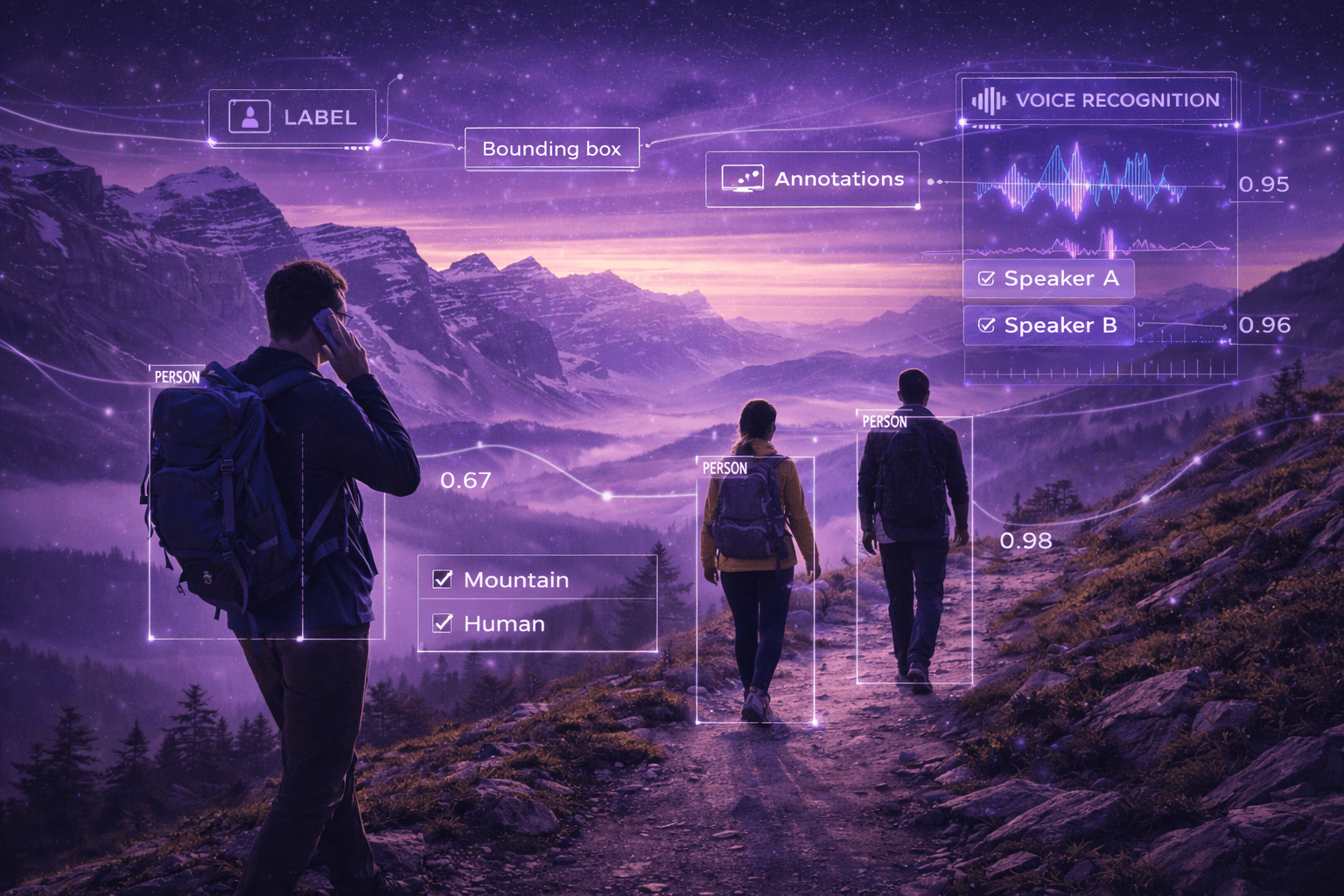

What is data labeling? Definition and why it matters

Data labeling (sometimes called data annotation or tagging) means attaching labels, categories, or structured information to raw data so that:

- Supervised models can learn from examples (e.g. "this phrase is a leasing intent," "this call is high value").

- Evaluation can measure how well a model performs (e.g. compared to human-labeled ground truth).

- Fine-tuning and improvement can happen over time as you add more labeled examples.

In practice, that often means labeling calls, transcripts, or messages with things like:

- Intent (e.g. sales, support, maintenance, billing, general question)

- Topic or category (e.g. availability, pricing, complaint, renewal)

- Entities (e.g. property name, product, dollar amount)

- Outcome or value (e.g. converted, needs follow-up, at-risk)

Why data labeling matters: AI is only as good as the data behind it. Poorly labeled or inconsistent data leads to unreliable models, wrong classifications, and inconsistent results. High-quality, industry-specific labels make AI accurate, reliable, and usable in production.

The process of data labeling: typical steps

The data labeling process usually follows a small set of steps, whether you do it in house or with a partner like Zweelie.

1. Define the labeling schema

First you decide what to label. That means defining:

- Label set: The list of categories or tags (e.g. intents: leasing, maintenance, billing, general, emergency).

- Rules and definitions: Clear, written definitions so different labelers apply the same meaning (e.g. "leasing" = prospect asking about availability, pricing, or how to apply).

- Format and structure: How labels are stored (e.g. one intent per utterance, multiple tags per conversation, nested labels).

Without a clear schema, labelers drift, consistency drops, and the resulting dataset is noisy.
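
One way to make a schema concrete is to write it down as code. The sketch below is a minimal illustration in Python, not a description of Zweelie's tooling. The `Intent` enum uses the example label set from this section, only the leasing definition comes from the text, and `DEFINITIONS`, `LabeledUtterance`, and `parse_label` are hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Intent(str, Enum):
    """Label set: the example intents from the text; adapt to your domain."""
    LEASING = "leasing"
    MAINTENANCE = "maintenance"
    BILLING = "billing"
    GENERAL = "general"
    EMERGENCY = "emergency"


# Rules and definitions: one written definition per label.
# Only the leasing text comes from the article; the rest are placeholders.
PLACEHOLDER = "Placeholder: write this definition with your domain experts."
DEFINITIONS = {
    Intent.LEASING: "Prospect asking about availability, pricing, or how to apply.",
    Intent.MAINTENANCE: PLACEHOLDER,
    Intent.BILLING: PLACEHOLDER,
    Intent.GENERAL: PLACEHOLDER,
    Intent.EMERGENCY: PLACEHOLDER,
}


@dataclass(frozen=True)
class LabeledUtterance:
    """Format and structure: exactly one intent per utterance (an assumption)."""
    text: str
    intent: Intent


def parse_label(text: str, raw_label: str) -> LabeledUtterance:
    """Normalize a raw label and reject anything outside the schema."""
    # Intent(...) raises ValueError for values that are not in the label set.
    return LabeledUtterance(text=text, intent=Intent(raw_label.strip().lower()))
```

Rejecting unknown labels at parse time is one simple guard against drift: a labeler who types "sales" when the schema has no such intent gets an error instead of silently adding a sixth category.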

2. Source or collect the data

You need raw data to label. That can be:

- Existing interactions: Call recordings, transcripts, chat logs, or messages from your own systems.

- Synthetic or augmented data: Additional examples created to balance classes or cover edge cases.

- Industry-specific samples: Data that matches the domain (e.g. property management, legal intake, service businesses) so the model learns your language and scenarios.

Data should match how the model will be used. If the AI will see real leasing and maintenance calls, the training data should be real (or realistic) leasing and maintenance conversations.

3. Annotate or label the data

Annotation is the core of the data labeling process: humans (or human-in-the-loop systems) apply the schema to each piece of data.

- Who labels: Internal staff, contractors, or a specialized partner. Consistency and domain knowledge matter more than headcount.

- Tools and workflow: Labeling can happen in spreadsheets, custom UIs, or purpose-built annotation platforms. The workflow should make it easy to apply labels, resolve edge cases, and track progress.

- Volume and coverage: Enough examples per label so the model can learn; extra focus on rare but important classes (e.g. "emergency" or "high value") so they are not under-represented.
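
A quick count per label is often enough to spot under-represented classes early. The following is a small sketch, assuming labels are plain strings; `coverage_report` and the `min_per_label` threshold are hypothetical, and the right threshold depends on your model and data.

```python
from collections import Counter


def coverage_report(labels, label_set, min_per_label):
    """Return {label: count} for every schema label below the threshold.

    Labels that never appear are reported with a count of 0.
    """
    counts = Counter(labels)
    return {
        label: counts[label]
        for label in label_set
        if counts[label] < min_per_label
    }


if __name__ == "__main__":
    labels = ["leasing"] * 5 + ["maintenance"] * 3 + ["emergency"]
    schema = ["leasing", "maintenance", "billing", "emergency"]
    # Labels listed here need more examples before training.
    print(coverage_report(labels, schema, min_per_label=3))
```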

4. Quality control and consistency

Labeled data is only useful if it is consistent.

- Inter-annotator agreement: When multiple people label the same data, do they agree? Low agreement often means unclear definitions or a need for more training.

- Review and audit: A portion of labels is re-checked or re-labeled to catch drift and errors.

- Iteration: Refine definitions and re-label when you find systematic mistakes.

Quality control is what turns "lots of labels" into high-quality training data.
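
Inter-annotator agreement can be put into a number. The text does not name a specific metric; a common choice for two labelers is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The function below is a minimal sketch of that statistic, assuming both labelers annotated the same items in the same order.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)

    # Observed agreement: fraction of items with identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement: probability both pick the same label independently,
    # given each annotator's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)

    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    annotator_1 = ["leasing", "leasing", "maintenance", "billing"]
    annotator_2 = ["leasing", "maintenance", "maintenance", "billing"]
    print(round(cohen_kappa(annotator_1, annotator_2), 3))
```

A kappa near 1 means the labelers apply the definitions the same way; a value near 0 means agreement is no better than chance, which usually points back to the schema definitions.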

5. Output: datasets for training and evaluation

The output of the data labeling process is structured datasets:

- Training set: Used to train or fine-tune the model.

- Validation set: Used to tune hyperparameters and monitor performance during training.

- Test set: Used to evaluate final performance on held-out data.

Datasets are split so the model is never evaluated on data it was trained on. They are also versioned and documented so you can reproduce results and improve over time.
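
The split itself can be sketched in a few lines. This example assumes an 80/10/10 ratio and a fixed random seed so the same split can be reproduced later; both values and the name `split_dataset` are illustrative choices, not something the process prescribes.

```python
import random


def split_dataset(examples, train=0.8, val=0.1, seed=0):
    """Shuffle once with a fixed seed, then cut into train/validation/test.

    Whatever remains after the train and validation cuts becomes the test set.
    """
    items = list(examples)
    random.Random(seed).shuffle(items)  # seeded, so the split is reproducible
    n_train = int(len(items) * train)
    n_val = int(len(items) * val)
    train_set = items[:n_train]
    val_set = items[n_train:n_train + n_val]
    test_set = items[n_train + n_val:]
    return train_set, val_set, test_set


if __name__ == "__main__":
    train_set, val_set, test_set = split_dataset(range(100))
    print(len(train_set), len(val_set), len(test_set))
```

For conversational data, it may be worth splitting by conversation or caller rather than by utterance, so that pieces of the same call do not end up on both sides of the split.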

What is human truth?

Human truth (often called "ground truth" in ML) is the set of labels that skilled humans assign to data after reviewing it. It is the reference you use to train, evaluate, and correct AI. When we say "compare to human truth," we mean: take the labels that experienced labelers applied to a call, transcript, or message, and treat those labels as the correct answer for that example.

Human truth is not perfect. Humans can misread context, disagree at the margins, or misapply a definition. But in practice, human truth is the best standard we have. We use it to (1) evaluate how well a model performs, (2) find where the model is wrong so we can fix it or retrain, and (3) decide when to trust the AI and when to override it. Without human truth, there is no clear "right answer" to compare the AI against.

How Zweelie uses a general model and human truth

At Zweelie, we often start by running a general model to predict intent, before fine-tuning any custom model. The goal is to see what the general model already gets right: which intents it assigns, where it is confident, and where it drifts or mislabels. We then compare that AI output to human truth and make adjustments manually.

In practice that means:

- Run a general model on your data: We use an off-the-shelf or general-purpose model to predict intent (or other labels) on your calls, transcripts, or messages. No custom model yet.

- See what the general model outputs: We inspect which labels the model assigns, where it agrees with our expectations, and where it does not.

- Build human truth: Skilled labelers assign the correct labels to the same data. That human-labeled set is our human truth.

- Compare AI to human truth: We line up the model's predictions with the human labels. Where they match, we gain confidence. Where they differ, we dig in.

- Make adjustments manually: We correct mislabels, refine definitions, and decide case by case. Sometimes we change the label (human was wrong, or we tighten the rule). Sometimes we flag the example for training or schema updates. Every change is done carefully, because neither the AI nor the human is always right.

Our labelers are often right but sometimes wrong, and the AI is not always right either. That is why the process is manual and careful. We do not auto-accept either the general model's output or the first pass of human labels. We compare, examine the disagreements, and adjust. Everything from schema design to final labels is done with that in mind, so the data you get is as reliable as we can make it.
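
The comparison step can be illustrated with a short sketch. It assumes each example is a `(text, model_label, human_label)` tuple and uses a hypothetical helper, `compare_to_human_truth`. It only surfaces disagreements for manual review; it does not decide which side is correct.

```python
from collections import Counter


def compare_to_human_truth(examples):
    """Line up model predictions with human labels.

    examples: iterable of (text, model_label, human_label) tuples.
    Returns the agreement rate, the disagreeing rows for manual review,
    and a count of each (human_label, model_label) confusion pair.
    """
    rows = list(examples)
    if not rows:
        raise ValueError("no examples to compare")
    disagreements = [row for row in rows if row[1] != row[2]]
    agreement_rate = 1 - len(disagreements) / len(rows)
    confusions = Counter((human, model) for _, model, human in disagreements)
    return agreement_rate, disagreements, confusions


if __name__ == "__main__":
    data = [
        ("Do you have pet-friendly apartments?", "leasing", "leasing"),
        ("My sink is leaking.", "maintenance", "maintenance"),
        ("Need a callback for my ticket.", "general", "maintenance"),
        ("Why was I charged twice?", "billing", "billing"),
    ]
    rate, to_review, confusions = compare_to_human_truth(data)
    print(rate)
    for row in to_review:
        print("review:", row)
```

Confusion pairs that recur often are a useful signal: they may mean the model is weak on that intent, or that the written definition is ambiguous enough that the human labels need a second look.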

How Zweelie can help with data labeling

Zweelie offers data labeling as a service so teams can get high-quality, industry-specific training data without building a labeling operation in house.

What Zweelie does

Zweelie labels and annotates industry-specific data so it can be used to train models accurately, either inside Zweelie's own systems or in your internal systems. In practice, that means:

- Schema and definitions: Working with you to define label sets and rules that match your use case (e.g. intent for property management, legal intake, or service businesses).

- Annotation: Applying those labels to your data (calls, transcripts, messages) with consistency and domain awareness.

- Quality: Quality control and consistency checks so the resulting datasets are reliable.

- Output: Structured, high-quality datasets you can use to train or evaluate models in Zweelie or elsewhere.

Who it benefits

Zweelie's data labeling service is for:

- Teams building or improving AI systems who need clean, reliable training data but do not want to hire and manage a full-time labeling team.

- Organizations in property management, service businesses, legal, trades, and similar domains who need labels that reflect real industry language and scenarios.

- Teams that already use Zweelie and want their call and message data labeled for operational intelligence, conversation classification, or custom models, as well as teams that only need labeled datasets for their own models.

Benefits of Zweelie's approach

Industry-specific labeling: Zweelie works with data from property management, legal intake, service businesses, and trades. Labels reflect how people actually talk (e.g. "pet-friendly apartments," "need a callback for my ticket," "quote for a full backyard") so models trained on Zweelie-labeled data perform better in those domains.

No in-house labeling ops: You get structured, high-quality datasets without hiring annotators, building labeling tools, or running QC yourself. That saves time and keeps the focus on model building and product.

Designed for AI that uses calls and messages: Data labeling at Zweelie is built for conversational data: calls, transcripts, and messages. The process and schema are aligned with intent, topic, value, and outcome, so the same labels that power Zweelie's operational intelligence and conversation classification can power your models.

Use with Zweelie or your own systems: Labeled data can feed Zweelie's Custom AI Models and Conversation Classification, or be delivered for use in your own training pipelines. You choose where the data goes.

Accuracy and reliability over time: Because Zweelie emphasizes definition clarity, consistency checks, and industry context, the resulting datasets support models that are accurate, reliable, and improvable as you add more labeled data.

Summary: data labeling process and how Zweelie helps

Data labeling is the process of preparing structured, high-quality data so AI systems can learn from it. The process of data labeling usually involves: (1) defining the schema and labels, (2) sourcing or collecting data, (3) annotating the data, (4) quality control and consistency, and (5) producing training, validation, and test datasets.

Human truth is the reference set of labels that skilled humans assign to data; it is what we compare the AI against and use to make manual adjustments. At Zweelie, we often start with a general model (no custom fine-tuning yet) to see which intents the model already assigns, then compare that output to human truth and make adjustments manually. Human labelers are often right but sometimes wrong, and the AI is not always right either, so everything from schema design to final labels is done carefully.

Zweelie can help by doing that process for you, with a focus on industry-specific data (property, legal, service businesses, trades) and conversational inputs (calls, transcripts, messages). You get high-quality training data without building labeling operations in house, and you can use that data in Zweelie's models or in your own systems. The benefit is better accuracy, reliability, and improvement over time for AI that depends on understanding what people say and mean in your domain.